In the last post we looked at kernels and visualized how an SVM kernel function works. To catch up, visit: https://codingmachinelearning.wordpress.com/2016/08/02/svm-visualizing-the-kernel-function/

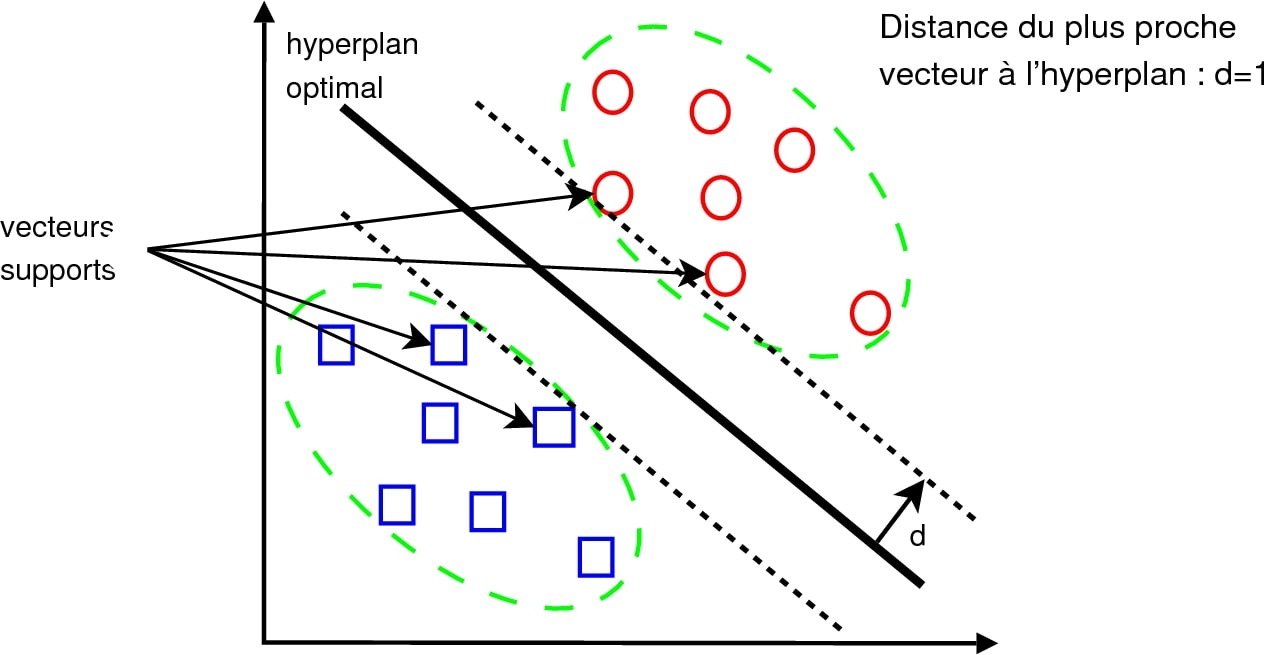

Now that we know how the plot looks in three dimensions, let's try to plot the hyperplane that separates this data set.

I have drawn the hyperplane manually in the diagram above. Let's validate this intuition to make sure that what I claim actually happens.

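Our kernel function is $K(x, y) = (x, y, x^2 + y^2)$, which lifts each 2-D point into three dimensions. (Strictly speaking this is the feature map; the kernel itself is the dot product of two lifted points.) Now we need to plot the hyperplane that separates the points.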
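As a minimal sketch of this mapping, assuming the data set is two concentric rings like those produced by scikit-learn's `make_circles` (the post's actual data generation lives in the linked code.py and may differ), the hypothetical helper `lift` below adds the third coordinate $x^2 + y^2$ to every point:

```python
import numpy as np
from sklearn.datasets import make_circles


def lift(points):
    """Hypothetical helper: map each 2-D point (x, y) to (x, y, x**2 + y**2)."""
    x, y = points[:, 0], points[:, 1]
    return np.column_stack([x, y, x**2 + y**2])


# Assumption: two noise-free concentric rings stand in for the post's data set.
# make_circles labels the outer ring 0 and the inner ring (radius 0.5) 1.
X, labels = make_circles(n_samples=200, factor=0.5, random_state=0)
X3 = lift(X)

# The third coordinate is the squared radius: 1.0 on the outer ring, 0.25 on the inner ring.
print("outer ring z:", X3[labels == 0, 2].min(), "to", X3[labels == 0, 2].max())
print("inner ring z:", X3[labels == 1, 2].min(), "to", X3[labels == 1, 2].max())
```

Because the third coordinate is the squared distance from the origin, the two rings end up at different heights, which is why a flat plane can separate them.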

Reading the values off the generated plot, the hyperplane spans:

- X-axis: -1.5 to 1.5 (length of the plane)

- Y-axis: -1.5 to 1.5 (breadth of the plane)

- Z-axis: 0.5 (height at which the plane separates red and blue)
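Written as equations, the plane described by these values, and what it becomes once we substitute the third coordinate of the kernel mapping, $z = x^2 + y^2$, are:

$$
\begin{aligned}
\text{plane:} \quad & z = 0.5, \qquad -1.5 \le x \le 1.5, \qquad -1.5 \le y \le 1.5 \\
\text{back in 2-D:} \quad & x^2 + y^2 = 0.5 \;\Longrightarrow\; \text{a circle of radius } \sqrt{0.5} \approx 0.707
\end{aligned}
$$

Points with $x^2 + y^2 > 0.5$ lie above the plane and points with $x^2 + y^2 < 0.5$ lie below it, so in the original plane the flat separator turns into a circle.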

Plotting a mesh grid with these values shows that the plane matches our claim.
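A minimal sketch of this check, again assuming concentric rings from `make_circles` in place of the post's data set: it builds the mesh grid for the plane $z = 0.5$ over $[-1.5, 1.5] \times [-1.5, 1.5]$ and tests whether every point of one class lies above the plane and every point of the other lies below it.

```python
import numpy as np
from sklearn.datasets import make_circles

# Assumption: noise-free concentric rings stand in for the post's data set.
X, labels = make_circles(n_samples=200, factor=0.5, random_state=0)
z = X[:, 0] ** 2 + X[:, 1] ** 2  # third coordinate of the kernel mapping

# Mesh grid for the plane read off the plot: x and y in [-1.5, 1.5], height 0.5.
xx, yy = np.meshgrid(np.linspace(-1.5, 1.5, 30), np.linspace(-1.5, 1.5, 30))
zz = np.full_like(xx, 0.5)

# The plane separates the classes if the outer ring (label 0) is entirely
# above it and the inner ring (label 1) is entirely below it.
above = z > 0.5
separates = bool(np.all(above[labels == 0]) and not np.any(above[labels == 1]))
print("plane z = 0.5 separates the rings:", separates)
```

The arrays `xx`, `yy` and `zz` are what you would pass to matplotlib's 3-D `plot_surface` to draw the plane next to the lifted points.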

The code to generate the plots is on my GitHub account. So far we have plotted the decision boundary manually; next we will try to get the same result with the scikit-learn SVM classifier and check whether it matches our intuition.

This is the output of the linear kernel, which gives an accuracy of only 58%. Its performance is very poor because it tries to separate a non-linear data set with a straight line.
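A hedged sketch of this experiment with scikit-learn's `SVC`. The data set (noisy concentric rings from `make_circles`) and the 70/30 train/test split are assumptions, so the exact accuracy will differ from the 58% reported above; the point is only that a linear kernel does poorly here.

```python
from sklearn.datasets import make_circles
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

# Assumption: noisy concentric rings stand in for the post's data set.
X, labels = make_circles(n_samples=400, factor=0.5, noise=0.05, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, labels, test_size=0.3, random_state=0
)

# A linear kernel can only draw a straight line in the original 2-D space.
linear_clf = SVC(kernel="linear")
linear_clf.fit(X_train, y_train)
linear_acc = linear_clf.score(X_test, y_test)  # mean accuracy on the test split
print(f"linear kernel accuracy: {linear_acc:.2f}")
```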

The performance of the SVM on this data set using an 'rbf' kernel is shown below. It reaches 100 percent classification accuracy, which is stunning.
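The same sketch with the 'rbf' kernel, under the same assumed data set and split. The RBF (Gaussian) kernel is $K(\mathbf{a}, \mathbf{b}) = \exp(-\gamma \lVert \mathbf{a} - \mathbf{b} \rVert^2)$; `gamma="scale"` is scikit-learn's default choice of $\gamma$.

```python
from sklearn.datasets import make_circles
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

# Assumption: same noisy concentric rings and split as the linear-kernel sketch.
X, labels = make_circles(n_samples=400, factor=0.5, noise=0.05, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, labels, test_size=0.3, random_state=0
)

# Gaussian (RBF) kernel: K(a, b) = exp(-gamma * ||a - b||^2).
rbf_clf = SVC(kernel="rbf", gamma="scale")
rbf_clf.fit(X_train, y_train)
rbf_acc = rbf_clf.score(X_test, y_test)  # mean accuracy on the test split
print(f"rbf kernel accuracy: {rbf_acc:.2f}")
```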

The resulting decision boundary is essentially the projection of the hyperplane onto the lower-dimensional (2-D) space. Remember, though, that this classifier uses the Gaussian function as its kernel, not the kernel we defined at the beginning. Follow the link below to read more about Gaussian kernels.
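If you want the classifier to use the kernel defined at the beginning instead of the Gaussian one, scikit-learn's `SVC` also accepts a callable that returns the Gram matrix between two sets of samples. The sketch below is one way to do that, not the post's method: the hypothetical helpers `lift` and `lifted_kernel` compute dot products of points mapped to $(x, y, x^2 + y^2)$, and the concentric-rings data is again an assumption.

```python
import numpy as np
from sklearn.datasets import make_circles
from sklearn.svm import SVC


def lift(points):
    """Hypothetical helper: map (x, y) to (x, y, x**2 + y**2)."""
    return np.column_stack([points[:, 0], points[:, 1], (points**2).sum(axis=1)])


def lifted_kernel(A, B):
    """Hypothetical kernel: Gram matrix of dot products in the lifted 3-D space."""
    return lift(A) @ lift(B).T  # shape (len(A), len(B)), as SVC expects


# Assumption: noisy concentric rings stand in for the post's data set.
X, labels = make_circles(n_samples=400, factor=0.5, noise=0.05, random_state=0)

clf = SVC(kernel=lifted_kernel)
clf.fit(X, labels)
train_acc = clf.score(X, labels)
print(f"training accuracy with the lifted kernel: {train_acc:.2f}")
```

Here the SVM learns a flat plane in the lifted space, so its boundary projected back onto the 2-D plane is a circle, just like the plane we drew by hand.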

This wraps up our little discussion on SVMs and how they work. In the next post we will look at another classifier, logistic regression, and hope to understand it better.

Code for the plots is available on my GitHub account: https://github.com/vsuriya93/coding-machine-learning/blob/master/SVM/code.py

Useful Links:

- Gaussian Kernel : https://www.quora.com/What-is-the-intuition-behind-Gaussian-kernel-in-SVM

- scikit-learn SVM: http://scikit-learn.org/stable/modules/generated/sklearn.svm.SVC.html#sklearn.svm.SVC