As a powerful scripting language adapted to both fast prototyping and bigger projects, Python is widely used in web application development.

Context

WSGI

The Web Server Gateway Interface (or “WSGI” for short) is a standard interface between web servers and Python web application frameworks. By standardizing behavior and communication between web servers and Python web frameworks, WSGI makes it possible to write portable Python web code that can be deployed in any WSGI-compliant web server. WSGI is documented in PEP 3333.
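To make the interface concrete, here is a minimal sketch of a WSGI application built only on the standard library's `wsgiref` module. The function name `application`, the helper `serve`, and the host and port are illustrative assumptions, not something the text above prescribes.

```python
from wsgiref.simple_server import make_server


def application(environ, start_response):
    """Minimal WSGI callable: receives the request environ and a start_response callback."""
    body = f"Hello from {environ.get('PATH_INFO', '/')}\n".encode("utf-8")
    headers = [
        ("Content-Type", "text/plain; charset=utf-8"),
        ("Content-Length", str(len(body))),
    ]
    start_response("200 OK", headers)
    # A WSGI app returns an iterable of byte strings.
    return [body]


def serve(host="127.0.0.1", port=8000):
    """Run the app with the reference server (development use only)."""
    with make_server(host, port, application) as httpd:
        httpd.serve_forever()
```

Calling `serve()` starts a local development server; any WSGI-compliant server should be able to run the same `application` callable unchanged.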

Frameworks

Broadly speaking, a web framework consists of a set of libraries and a main handler within which you can build custom code to implement a web application (i.e. an interactive web site). Most web frameworks include patterns and utilities to accomplish at least the following:

- URL Routing: matches an incoming HTTP request to a particular piece of Python code to be invoked.

- Request and Response Objects: encapsulate the information received from or sent to a user’s browser.

- Template Engine: allows for separating Python code implementing an application’s logic from the HTML (or other) output that it produces.

- Development Web Server: runs an HTTP server on development machines to enable rapid development; often automatically reloads server-side code when files are updated.
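The URL routing idea can be sketched in a few lines of plain Python. This is a toy illustration of decorator-based routing, not the implementation of any framework named in this guide; the `Router` class and its method names are hypothetical.

```python
from typing import Callable, Dict, Optional, Tuple

Handler = Callable[[dict], Tuple[int, str]]


class Router:
    """Toy router: maps (method, path) pairs to handler functions."""

    def __init__(self) -> None:
        self._routes: Dict[Tuple[str, str], Handler] = {}

    def route(self, path: str, method: str = "GET"):
        # Decorator that registers the handler and returns it unchanged.
        def decorator(handler: Handler) -> Handler:
            self._routes[(method.upper(), path)] = handler
            return handler
        return decorator

    def dispatch(self, method: str, path: str, request: Optional[dict] = None):
        handler = self._routes.get((method.upper(), path))
        if handler is None:
            return 404, "Not Found"
        return handler(request or {})


router = Router()


@router.route("/")
def index(request):
    return 200, "Hello, world!"


@router.route("/echo", method="POST")
def echo(request):
    return 200, request.get("body", "")
```

Real frameworks add path parameters, richer request and response objects, and error handling on top of this basic lookup.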

Django

Django is a “batteries included” web application framework, and is an excellent choice for creating content-oriented websites. By providing many utilities and patterns out of the box, Django aims to make it possible to build complex, database-backed web applications quickly, while encouraging best practices in code written using it.

Django has a large and active community, and many pre-built re-usable modules that can be incorporated into a new project as-is, or customized to fit your needs.

There are annual Django conferences in the United States, Europe, and Australia.

Django is one of the most widely used frameworks for new Python web applications today.

Flask

Flask is a “microframework” for Python, and is an excellent choice for building smaller applications, APIs, and web services.

Building an app with Flask is a lot like writing standard Python modules, except some functions have routes attached to them. It’s really beautiful.

Rather than aiming to provide everything you could possibly need, Flask implements the most commonly used core components of a web application framework, like URL routing, request and response objects, and templates.

If you use Flask, it is up to you to choose other components for your application, if any. For example, database access or form generation and validation are not built-in functions of Flask.

This is great, because many web applications don’t need those features. For those that do, there are many Extensions available that may suit your needs. Or, you can easily use any library you want yourself!

Flask is the default choice for any Python web application that isn’t a good fit for Django.

Falcon

Falcon is a good choice when your goal is to build RESTful API microservices that are fast and scalable.

It is a reliable, high-performance Python web framework for building large-scale app backends and microservices. Falcon encourages the REST architectural style of mapping URIs to resources, trying to do as little as possible while remaining highly effective.

Falcon highlights four main focuses: speed, reliability, flexibility, and debuggability. It implements HTTP through “responders” such as on_get(), on_put(), etc. These responders receive intuitive request and response objects.

Tornado

Tornado is an asynchronous web framework for Python that has its own event loop. This allows it to natively support WebSockets, for example. Well-written Tornado applications are known to have excellent performance characteristics.

I do not recommend using Tornado unless you think you need it.

Pyramid

Pyramid is a very flexible framework with a heavy focus on modularity. It comes with a small number of libraries (“batteries”) built-in, and encourages users to extend its base functionality. A set of provided cookiecutter templates helps users make decisions for new projects. It powers one of the most important parts of Python infrastructure: PyPI.

Pyramid does not have a large user base, unlike Django and Flask. It’s a capable framework, but not a very popular choice for new Python web applications today.

Masonite

Masonite is a modern, developer-centric, “batteries included” web framework.

The Masonite framework follows the MVC (Model-View-Controller) architecture pattern and is heavily inspired by frameworks such as Rails and Laravel, so if you are coming to Python from a Ruby or PHP background then you will feel right at home!

Masonite comes with a lot of functionality out of the box including a powerful IOC container with auto resolving dependency injection, craft command line tools, and the Orator active record style ORM.

Masonite is perfect for beginners or experienced developers alike and works hard to be fast and easy from install through to deployment. Try it once and you’ll fall in love.

FastAPI

FastAPI is a modern web framework for building APIs with Python 3.6+.

It has very high performance as it is based on Starlette and Pydantic.

FastAPI takes advantage of standard Python type declarations in function parameters to declare request parameters and bodies, perform data conversion (serialization, parsing), data validation, and automatic API documentation with OpenAPI 3 (including JSON Schema).

It includes tools and utilities for security and authentication (including OAuth2 with JWT tokens), a dependency injection system, automatic generation of interactive API documentation, and other features.

Web Servers

Nginx

Nginx (pronounced “engine-x”) is a web server and reverse-proxy for HTTP, SMTP, and other protocols. It is known for its high performance, relative simplicity, and compatibility with many application servers (like WSGI servers). It also includes handy features like load-balancing, basic authentication, streaming, and others. Designed to serve high-load websites, Nginx is gradually becoming quite popular.

WSGI Servers

Stand-alone WSGI servers typically use fewer resources than traditional web servers and provide top performance [1].

Gunicorn

Gunicorn (Green Unicorn) is a pure-Python WSGI server used to serve Python applications. Unlike other Python web servers, it has a thoughtful user interface, and is extremely easy to use and configure.

Gunicorn has sane and reasonable defaults for configurations. However, some other servers, like uWSGI, are tremendously more customizable, and therefore are much more difficult to use effectively.

Gunicorn is the recommended choice for new Python web applications today.
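Gunicorn can read its settings from a Python file, so a small configuration might look like the sketch below. The file name, bind address, and values are assumptions chosen for illustration; the worker formula is a common starting point rather than a rule.

```python
# gunicorn.conf.py (hypothetical file name and values, for illustration only)
import multiprocessing

# Address and port the server listens on.
bind = "127.0.0.1:8000"

# Number of worker processes; (2 x cores) + 1 is a commonly suggested starting point.
workers = multiprocessing.cpu_count() * 2 + 1

# Seconds a worker may stay silent before it is restarted.
timeout = 30

# "-" sends the access log to stdout.
accesslog = "-"
loglevel = "info"
```

With such a file in place, something like `gunicorn -c gunicorn.conf.py myapp:application` would start the server, where `myapp` is a hypothetical module exposing a WSGI callable named `application`.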

Waitress

Waitress is a pure-Python WSGI server that claims “very acceptable performance”. Its documentation is not very detailed, but it does offer some nice functionality that Gunicorn doesn’t have (e.g. HTTP request buffering).

Waitress is gaining popularity within the Python web development community.

uWSGI

uWSGI is a full stack for building hosting services. In addition to process management, process monitoring, and other functionality, uWSGI acts as an application server for various programming languages and protocols – including Python and WSGI. uWSGI can either be run as a stand-alone web router, or be run behind a full web server (such as Nginx or Apache). In the latter case, a web server can configure uWSGI and an application’s operation over the uwsgi protocol. uWSGI’s web server support allows for dynamically configuring Python, passing environment variables, and further tuning. For full details, see uWSGI magic variables.

I do not recommend using uWSGI unless you know why you need it.

Server Best Practices

The majority of self-hosted Python applications today are hosted with a WSGI server such as Gunicorn, either directly or behind a lightweight web server such as nginx.

The WSGI servers serve the Python applications while the web server handles tasks better suited for it such as static file serving, request routing, DDoS protection, and basic authentication.

Hosting

Platform-as-a-Service (PaaS) is a type of cloud computing infrastructure which abstracts and manages infrastructure, routing, and scaling of web applications. When using a PaaS, application developers can focus on writing application code rather than needing to be concerned with deployment details.

Heroku

Heroku offers first-class support for Python 2.7–3.5 applications.

Heroku supports all types of Python web applications, servers, and frameworks. Applications can be developed on Heroku for free. Once your application is ready for production, you can upgrade to a Hobby or Professional application.

Heroku maintains detailed articles on using Python with Heroku, as well as step-by-step instructions on how to set up your first application.

Heroku is the recommended PaaS for deploying Python web applications today.

Templating

Most WSGI applications respond to HTTP requests to serve content in HTML or other markup languages. Instead of directly generating textual content from Python, the concept of separation of concerns advises us to use templates. A template engine manages a suite of template files, with a system of hierarchy and inclusion to avoid unnecessary repetition, and is in charge of rendering (generating) the actual content, filling the static content of the templates with the dynamic content generated by the application.

As template files are sometimes written by designers or front-end developers, it can be difficult to handle increasing complexity.

Some general good practices apply to the part of the application passing dynamic content to the template engine, and to the templates themselves.

- Template files should be passed only the dynamic content that is needed for rendering the template. Avoid the temptation to pass additional content “just in case”: it is easier to add some missing variable when needed than to remove a likely unused variable later.

- Many template engines allow for complex statements or assignments in the template itself, and many allow some Python code to be evaluated in the templates. This convenience can lead to an uncontrolled increase in complexity, and often makes it harder to find bugs.

- It is often necessary to mix JavaScript templates with HTML templates. A sane approach to this design is to isolate the parts where the HTML template passes some variable content to the JavaScript code.
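The first practice in the list above can be illustrated with the standard library's `string.Template`, used here only as a stand-in for a full template engine. The template text, the `render_profile` helper, and the sample record are assumptions made for this sketch.

```python
from html import escape
from string import Template

# Static markup lives in the template; only two values are dynamic.
PAGE = Template("<h1>$title</h1>\n<p>Welcome, $username!</p>")


def render_profile(user: dict) -> str:
    # Build the context explicitly instead of handing over the whole user record.
    # string.Template does no escaping, so the value is escaped by hand here.
    context = {"title": "Profile", "username": escape(user["name"])}
    return PAGE.substitute(context)


user_record = {"name": "Ada", "email": "ada@example.com", "password_hash": "..."}
page_html = render_profile(user_record)
```

Because the context is built by hand, fields such as `email` or `password_hash` can never leak into the rendered page by accident.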

Jinja2

Jinja2 is a very well-regarded template engine.

It uses a text-based template language and can thus be used to generate any type of markup, not just HTML. It allows customization of filters, tags, tests, and globals. It features many improvements over Django’s templating system.

Here are some important tags in Jinja2:

The next listings are an example of a web site in combination with the Tornado web server. Tornado is not very complicated to use.

The base.html file can be used as base for all site pages which are for example implemented in the content block.

The next listing is our site page (site.html) loaded in the Python app which extends base.html. The content block is automatically set into the corresponding block in the base.html page.
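A minimal sketch of the base.html / site.html inheritance described above, using Jinja2's `DictLoader` so that both templates fit in a single Python file. The template contents and variable names are illustrative assumptions, and the Tornado handler is left out to keep the focus on templating.

```python
from jinja2 import DictLoader, Environment, select_autoescape

# In a real project these would be files in a templates directory.
TEMPLATES = {
    "base.html": (
        "<html><head><title>{{ title }}</title></head>"
        "<body>{% block content %}{% endblock %}</body></html>"
    ),
    "site.html": (
        '{% extends "base.html" %}'
        "{% block content %}"
        "<ul>{% for item in items %}<li>{{ item }}</li>{% endfor %}</ul>"
        "{% endblock %}"
    ),
}

env = Environment(
    loader=DictLoader(TEMPLATES),
    autoescape=select_autoescape(["html"]),  # escape values in .html templates
)

# site.html fills the content block defined in base.html.
rendered = env.get_template("site.html").render(title="Demo", items=["a", "<b>"])
```

The `{{ ... }}` markers output variables, `{% block %}` defines a region that child templates can override, and `{% for %}` iterates over a sequence.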

Jinja2 is the recommended templating library for new Python web applications.

Chameleon

Chameleon Page Templates are an HTML/XML template engine implementation of the Template Attribute Language (TAL), TAL Expression Syntax (TALES), and Macro Expansion TAL (METAL) syntaxes.

Chameleon is available for Python 2.5 and up (including 3.x and PyPy), and is commonly used by the Pyramid Framework.

Page Templates add within your document structure special element attributes and text markup. Using a set of simple language constructs, you control the document flow, element repetition, text replacement, and translation. Because of the attribute-based syntax, unrendered page templates are valid HTML and can be viewed in a browser and even edited in WYSIWYG editors. This can make round-trip collaboration with designers and prototyping with static files in a browser easier.

The basic TAL language is simple enough to grasp from an example:

The <span tal:replace="expression" /> pattern for text insertion is common enough that if you do not require strict validity in your unrendered templates, you can replace it with a more terse and readable syntax that uses the pattern ${expression}, as follows:

But keep in mind that the full <span tal:replace="expression">Default Text</span> syntax also allows for default content in the unrendered template.

Being from the Pyramid world, Chameleon is not widely used.

Mako

Mako is a template language that compiles to Python for maximum performance. Its syntax and API are borrowed from the best parts of other templating languages like Django and Jinja2 templates. It is the default template language included with the Pylons and Pyramid web frameworks.

An example template in Mako looks like:

To render a very basic template, you can do the following:

Mako is well respected within the Python web community.

References

[1] Benchmark of Python WSGI Servers

Web crawling is a powerful technique to collect data from the web by finding all the URLs for one or multiple domains. Python has several popular web crawling libraries and frameworks.

In this article, we will first introduce different crawling strategies and use cases. Then we will build a simple web crawler from scratch in Python using two libraries: requests and Beautiful Soup. Next, we will see why it’s better to use a web crawling framework like Scrapy. Finally, we will build an example crawler with Scrapy to collect film metadata from IMDb and see how Scrapy scales to websites with several million pages.

What is a web crawler?

Web crawling and web scraping are two different but related concepts. Web crawling is a component of web scraping: the crawler logic finds URLs to be processed by the scraper code.

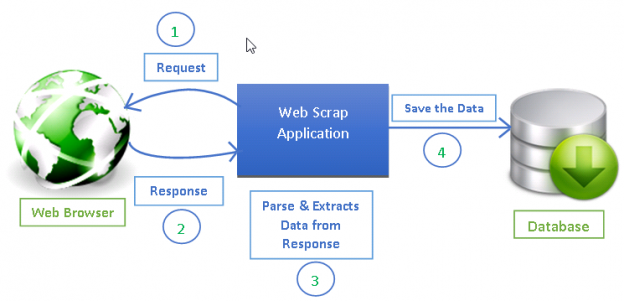

A web crawler starts with a list of URLs to visit, called the seed. For each URL, the crawler finds links in the HTML, filters those links based on some criteria and adds the new links to a queue. All the HTML or some specific information is extracted to be processed by a different pipeline.

Web crawling strategies

In practice, web crawlers only visit a subset of pages depending on the crawl budget, which can be a maximum number of pages per domain, a maximum depth, or a maximum execution time.
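The seed, queue, and budget ideas can be sketched as a breadth-first loop. To keep the example runnable without network access, it walks a hypothetical in-memory link graph (`FAKE_WEB`); the function name `crawl` and its parameters are assumptions for illustration.

```python
from collections import deque

# Hypothetical in-memory "web": each URL maps to the links found on that page.
FAKE_WEB = {
    "https://example.com/": ["https://example.com/a", "https://example.com/b"],
    "https://example.com/a": ["https://example.com/c", "https://example.com/"],
    "https://example.com/b": ["https://example.com/c"],
    "https://example.com/c": ["https://example.com/d"],
    "https://example.com/d": [],
}


def crawl(seed, get_links, max_pages=100, max_depth=2):
    """Breadth-first crawl bounded by a page count and a link depth."""
    queue = deque((url, 0) for url in seed)
    seen = set(seed)
    visited = []
    while queue and len(visited) < max_pages:
        url, depth = queue.popleft()
        visited.append(url)
        if depth == max_depth:
            continue  # depth budget reached: do not follow links from this page
        for link in get_links(url):
            if link not in seen:  # filter out URLs that are already queued or visited
                seen.add(link)
                queue.append((link, depth + 1))
    return visited


order = crawl(["https://example.com/"], lambda url: FAKE_WEB.get(url, []))
```

With `max_depth=2`, the page `/d` is never visited because it sits three links away from the seed.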

Most popular websites provide a robots.txt file to indicate which areas of the website each user agent is not allowed to crawl. The opposite of the robots file is the sitemap.xml file, which lists the pages that can be crawled.
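Python's standard library can evaluate robots.txt rules through `urllib.robotparser`. The sketch below parses an inlined, hypothetical robots.txt so it runs without network access; the user-agent names and paths are assumptions.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, inlined so the example needs no network access.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Disallow: /search

User-agent: BadBot
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def allowed(url, user_agent="MyCrawler"):
    """Return True when the parsed rules let user_agent fetch url."""
    return parser.can_fetch(user_agent, url)
```

For a live site, `RobotFileParser.set_url()` followed by `read()` would download the file instead of parsing an inlined string.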

Popular web crawler use cases include:

- Search engines (Googlebot, Bingbot, Yandex Bot…) collect all the HTML for a significant part of the Web. This data is indexed to make it searchable.

- On top of collecting the HTML, SEO analytics tools also collect metadata such as response time and response status to detect broken pages, as well as the links between different domains to collect backlinks.

- Price monitoring tools crawl e-commerce websites to find product pages and extract metadata, notably the price. Product pages are then periodically revisited.

- Common Crawl maintains an open repository of web crawl data. For example, the archive from October 2020 contains 2.71 billion web pages.

Next, we will compare three different strategies for building a web crawler in Python. First, using only standard libraries, then third party libraries for making HTTP requests and parsing HTML and finally, a web crawling framework.

Building a simple web crawler in Python from scratch

To build a simple web crawler in Python we need at least one library to download the HTML from a URL and an HTML parsing library to extract links. Python provides standard libraries urllib for making HTTP requests and html.parser for parsing HTML. An example Python crawler built only with standard libraries can be found on Github.
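As a rough sketch of the standard-library approach, the following combines `html.parser` for link extraction with `urllib.request` for downloading. The class and function names are assumptions, and this is not the GitHub example mentioned above.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin
from urllib.request import urlopen


class LinkParser(HTMLParser):
    """Collects absolute URLs from the href attribute of <a> tags."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    # Resolve relative links against the page URL.
                    self.links.append(urljoin(self.base_url, value))


def extract_links(base_url, html):
    parser = LinkParser(base_url)
    parser.feed(html)
    return parser.links


def download(url, timeout=10):
    """Fetch a page and decode it; performs a real network request when called."""
    with urlopen(url, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


sample = '<a href="/chart/top">Top</a> <a href="https://example.org/x">X</a> <a>no href</a>'
links = extract_links("https://example.com/", sample)
```

Even this small example needs a custom parser class, which hints at why third-party libraries are usually preferred.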

The standard Python libraries for requests and HTML parsing are not very developer-friendly. Other popular libraries like requests, branded as HTTP for humans, and Beautiful Soup provide a better developer experience. You can install the two libraries locally.

A basic crawler can be built following the previous architecture diagram.
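The sketch below shows what such a crawler could look like with requests and Beautiful Soup. The method names `download_url`, `get_linked_urls`, and `add_url_to_visit` follow the description in the text; the remaining details (timeout, logging format, marking a URL as visited before crawling it) are assumptions.

```python
import logging
from urllib.parse import urljoin

import requests
from bs4 import BeautifulSoup

logging.basicConfig(format="%(asctime)s %(levelname)s:%(message)s", level=logging.INFO)


class Crawler:
    def __init__(self, urls=None):
        self.visited_urls = []
        self.urls_to_visit = list(urls or [])

    def download_url(self, url):
        return requests.get(url, timeout=10).text

    def get_linked_urls(self, url, html):
        soup = BeautifulSoup(html, "html.parser")
        for link in soup.find_all("a"):
            path = link.get("href")
            if path and path.startswith("/"):
                path = urljoin(url, path)  # make site-relative links absolute
            yield path

    def add_url_to_visit(self, url):
        # Skip missing hrefs and URLs that are already known.
        if url and url not in self.visited_urls and url not in self.urls_to_visit:
            self.urls_to_visit.append(url)

    def crawl(self, url):
        html = self.download_url(url)
        for linked_url in self.get_linked_urls(url, html):
            self.add_url_to_visit(linked_url)

    def run(self):
        while self.urls_to_visit:
            url = self.urls_to_visit.pop(0)
            self.visited_urls.append(url)
            logging.info("Crawling: %s", url)
            try:
                self.crawl(url)
            except Exception:
                logging.exception("Failed to crawl: %s", url)
```

A crawl would be started with something like `Crawler(urls=["https://www.imdb.com/"]).run()`.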

The code above defines a Crawler class with helper methods to download_url using the requests library, get_linked_urls using the Beautiful Soup library and add_url_to_visit to filter URLs. The URLs to visit and the visited URLs are stored in two separate lists. You can run the crawler on your terminal.

The crawler logs one line for each visited URL.

The code is very simple but there are many performance and usability issues to solve before successfully crawling a complete website.

- The crawler is slow and supports no parallelism. As can be seen from the timestamps, it takes about one second to crawl each URL. Each time the crawler makes a request it waits for the request to be resolved and no work is done in between.

- The download URL logic has no retry mechanism, and the URL queue is not a real queue, so it becomes inefficient with a large number of URLs.

- The link extraction logic doesn’t support standardizing URLs by removing URL query string parameters, doesn’t handle URLs starting with #, doesn’t support filtering URLs by domain or filtering out requests to static files.

- The crawler doesn’t identify itself and ignores the robots.txt file.
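Two of the issues listed above, the missing retry mechanism and the inefficient URL list, can be addressed with small standard-library helpers. This is a sketch under assumptions: `fetch_with_retry` and `UrlFrontier` are hypothetical names, and the backoff values are arbitrary.

```python
import time
from collections import deque


def fetch_with_retry(fetch, url, retries=3, backoff=0.5, sleep=time.sleep):
    """Call fetch(url), retrying with exponential backoff when it raises."""
    for attempt in range(retries + 1):
        try:
            return fetch(url)
        except Exception:
            if attempt == retries:
                raise  # out of retries: surface the last error
            sleep(backoff * 2 ** attempt)


class UrlFrontier:
    """FIFO queue of URLs with constant-time duplicate checks."""

    def __init__(self, seeds=()):
        self._queue = deque()
        self._seen = set()
        for url in seeds:
            self.add(url)

    def add(self, url):
        if url not in self._seen:
            self._seen.add(url)
            self._queue.append(url)

    def pop(self):
        return self._queue.popleft()

    def __len__(self):
        return len(self._queue)
```

A `deque` pops from the front in constant time, whereas `list.pop(0)` shifts every remaining element; the `set` replaces the linear scan over visited URLs.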

Next, we will see how Scrapy provides all these functionalities and makes it easy to extend for your custom crawls.

Web crawling with Scrapy

Scrapy is the most popular web scraping and crawling Python framework with 40k stars on Github. One of the advantages of Scrapy is that requests are scheduled and handled asynchronously. This means that Scrapy can send another request before the previous one is completed or do some other work in between. Scrapy can handle many concurrent requests but can also be configured to respect the websites with custom settings, as we’ll see later.

Scrapy has a multi-component architecture. Normally, you will implement at least two different classes: Spider and Pipeline. Web scraping can be thought of as an ETL where you extract data from the web and load it to your own storage. Spiders extract the data and pipelines load it into the storage. Transformation can happen both in spiders and pipelines, but I recommend that you set a custom Scrapy pipeline to transform each item independently of each other. This way, failing to process an item has no effect on other items.

On top of all that, you can add spider and downloader middlewares in between components, as can be seen in the diagram below.

Scrapy Architecture Overview [source]

If you have used Scrapy before, you know that a web scraper is defined as a class that inherits from the base Spider class and implements a parse method to handle each response. If you are new to Scrapy, you can read this article for easy scraping with Scrapy.

Scrapy also provides several generic spider classes: CrawlSpider, XMLFeedSpider, CSVFeedSpider and SitemapSpider. The CrawlSpider class inherits from the base Spider class and provides an extra rules attribute to define how to crawl a website. Each rule uses a LinkExtractor to specify which links are extracted from each page. Next, we will see how to use each one of them by building a crawler for IMDb, the Internet Movie Database.

Building an example Scrapy crawler for IMDb

Before trying to crawl IMDb, I checked IMDb’s robots.txt file to see which URL paths are allowed. The robots file only disallows 26 paths for all user-agents. Scrapy reads the robots.txt file beforehand and respects it when the ROBOTSTXT_OBEY setting is set to True. This is the case for all projects generated with the Scrapy command startproject.

This command creates a new project with the default Scrapy project folder structure.

Then you can create a spider in scrapy_crawler/spiders/imdb.py with a rule to extract all links.

You can launch the crawler in the terminal.

You will get lots of logs, including one log for each request. Exploring the logs I noticed that even if we set allowed_domains to only crawl web pages under https://www.imdb.com, there were requests to external domains, such as amazon.com.

IMDb redirects from URL paths under whitelist-offsite and whitelist to external domains. There is an open Scrapy GitHub issue that shows that external URLs don’t get filtered out when the OffsiteMiddleware is applied before the RedirectMiddleware. To fix this issue, we can configure the link extractor to deny URLs starting with two regular expressions.

Rule and LinkExtractor classes support several arguments to filter out URLs. For example, you can ignore specific URL extensions and reduce the number of duplicate URLs by sorting query strings. If you don’t find a specific argument for your use case, you can pass a custom function to process_links in Rule or process_value in LinkExtractor.

For example, IMDb has two different URLs with the same content.

To limit the number of crawled URLs, we can remove all query strings from URLs with the url_query_cleaner function from the w3lib library and use it in process_links.

Now that we have limited the number of requests to process, we can add a parse_item method to extract data from each page and pass it to a pipeline to store it. For example, we can either extract the whole response.text to process it in a different pipeline or select the HTML metadata. To select the HTML metadata in the head tag we can code our own XPaths, but I find it better to use a library, extruct, that extracts all metadata from an HTML page. You can install it with pip install extruct.

I set the follow attribute to True so that Scrapy still follows all links from each response even if we provided a custom parse method. I also configured extruct to extract only Open Graph metadata and JSON-LD, a popular method for encoding linked data using JSON in the Web, used by IMDb. You can run the crawler and store items in JSON lines format to a file.

The output file imdb.jl contains one line for each crawled item. For example, the extracted Open Graph metadata for a movie taken from the <meta> tags in the HTML looks like this.

The JSON-LD for a single item is too long to be included in the article; here is a sample of what Scrapy extracts from the <script type='application/ld+json'> tag.

Exploring the logs, I noticed another common issue with crawlers. By sequentially clicking on filters, the crawler generates URLs with the same content, only that the filters were applied in a different order.

Long filter and search URLs are a difficult problem that can be partially solved by limiting the length of URLs with a Scrapy setting, URLLENGTH_LIMIT.
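Outside of Scrapy, the same two ideas (treating reordered filters as one URL and capping URL length) can be sketched with `urllib.parse`. The helper names are hypothetical, and the 2083-character cap is an assumption chosen for this example.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

MAX_URL_LENGTH = 2083  # assumed cap for this sketch


def canonicalize(url):
    """Sort query parameters and drop the fragment so equivalent URLs compare equal."""
    parts = urlsplit(url)
    query = urlencode(sorted(parse_qsl(parts.query, keep_blank_values=True)))
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path, query, ""))


def should_crawl(url, seen):
    """Return True only for URLs that are short enough and not yet seen."""
    key = canonicalize(url)
    if len(key) > MAX_URL_LENGTH or key in seen:
        return False
    seen.add(key)
    return True


a = "https://www.imdb.com/search/title/?genres=drama&year=2020#top"
b = "https://www.imdb.com/search/title/?year=2020&genres=drama"
```

Here `a` and `b` differ only in parameter order and fragment, so both canonicalize to the same key and the second one is skipped.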

I used IMDb as an example to show the basics of building a web crawler in Python. I didn’t let the crawler run for long as I didn’t have a specific use case for the data. In case you need specific data from IMDb, you can check the IMDb Datasets project that provides a daily export of IMDb data and IMDbPY, a Python package for retrieving and managing the data.

Web crawling at scale

If you attempt to crawl a big website like IMDb, with over 45M pages according to Google, it’s important to crawl responsibly by configuring the following settings. You can identify your crawler and provide contact details in the USER_AGENT setting. To limit the pressure you put on the website servers, you can increase the DOWNLOAD_DELAY, limit the CONCURRENT_REQUESTS_PER_DOMAIN, or set AUTOTHROTTLE_ENABLED, which adapts those settings dynamically based on the response times from the server.

Notice that Scrapy crawls are optimized for a single domain by default. If you are crawling multiple domains, check these settings to optimize for broad crawls, including changing the default crawl order from depth-first to breadth-first. To limit your crawl budget, you can limit the number of requests with the CLOSESPIDER_PAGECOUNT setting of the close spider extension.
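Put together, a polite configuration might look like the following fragment of a Scrapy project's settings.py. The setting names are Scrapy's; every value, the bot name, and the contact URL are assumptions to be tuned per site.

```python
# settings.py (Scrapy project settings; all values are illustrative assumptions)
BOT_NAME = "scrapy_crawler"

# User-Agent string sent with requests; the contact URL is hypothetical.
USER_AGENT = "scrapy_crawler (+https://example.com/contact)"

# Respect robots.txt rules.
ROBOTSTXT_OBEY = True

# Throttle the crawl to limit pressure on the target servers.
DOWNLOAD_DELAY = 1.0
CONCURRENT_REQUESTS_PER_DOMAIN = 4
AUTOTHROTTLE_ENABLED = True
AUTOTHROTTLE_START_DELAY = 1.0
AUTOTHROTTLE_MAX_DELAY = 30.0

# Crawl budget: stop after this many responses.
CLOSESPIDER_PAGECOUNT = 10_000

# Breadth-first crawl order, as suggested for broad crawls.
DEPTH_PRIORITY = 1
SCHEDULER_DISK_QUEUE = "scrapy.squeues.PickleFifoDiskQueue"
SCHEDULER_MEMORY_QUEUE = "scrapy.squeues.FifoMemoryQueue"
```

AutoThrottle adjusts the delay between its start and maximum values based on observed latency, so the fixed `DOWNLOAD_DELAY` acts as a lower bound.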

With the default settings, Scrapy crawls about 600 pages per minute for a website like IMDb. To crawl 45M pages it will take more than 50 days for a single robot. If you need to crawl multiple websites it can be better to launch separate crawlers for each big website or group of websites. If you are interested in distributed web crawls, you can read how a developer crawled 250M pages with Python in 40 hours using 20 Amazon EC2 machine instances.
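The estimate above is simple arithmetic and can be checked in a few lines; the variable names are illustrative, and the figures are the ones quoted in the text.

```python
PAGES = 45_000_000        # approximate number of IMDb pages quoted above
PAGES_PER_MINUTE = 600    # single-crawler throughput quoted above

minutes = PAGES / PAGES_PER_MINUTE   # 75,000 minutes
days = minutes / (60 * 24)           # roughly 52 days

# For comparison: 250M pages in 40 hours on 20 machines.
per_machine_per_minute = 250_000_000 / (40 * 60 * 20)  # roughly 5,200 pages per minute
```

The distributed crawl therefore ran almost nine times faster per machine than the single default-configured crawler, in addition to using 20 machines in parallel.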

In some cases, you may run into websites that require you to execute JavaScript code to render all the HTML. If you fail to do so, you may not collect all links on the website. Because it’s very common nowadays for websites to render content dynamically in the browser, I wrote a Scrapy middleware for rendering JavaScript pages using ScrapingBee’s API.

Conclusion

We compared the code of a Python crawler using third-party libraries for downloading URLs and parsing HTML with a crawler built using a popular web crawling framework. Scrapy is a very performant web crawling framework and it’s easy to extend with your custom code. But you need to know all the places where you can hook your own code and the settings for each component.

Configuring Scrapy properly becomes even more important when crawling websites with millions of pages. If you want to learn more about web crawling I suggest that you pick a popular website and try to crawl it. You will definitely run into new issues, which makes the topic fascinating!
